U.S. financial firms that are hesitant to use AI for surveillance face a knot of regulatory, technical, and cultural challenges that slow adoption. AI surveillance in finance promises efficiency gains, yet many institutions remain cautious because of explainability gaps, legal exposure, vendor concentration risks, and privacy worries. Financial firms’ AI hesitancy is as much about avoiding catastrophic mistakes as it is about seizing opportunity. This article unpacks why that hesitation exists and how students and future practitioners should think about it.

Executive summary (so you know what to expect)

First, this blog explains what AI surveillance means in a financial context. Second, it lists the concrete benefits that make AI tempting. Third, it analyzes the many reasons U.S. financial firms are hesitant to use AI for surveillance, including regulatory ambiguity, model explainability, data privacy, operational risk, vendor concentration, and potential for bias. Fourth, it examines real-world signals from regulators and industry bodies about governance expectations. Finally, it offers practical steps and study pointers for students and junior professionals who want to work responsibly at the intersection of AI, compliance, and finance. Throughout, “AI surveillance in finance” refers to the technology and its uses, while “AI hesitancy” refers to the human and organizational factors that slow its adoption.

What do we mean by “AI surveillance in finance”?

AI surveillance in finance broadly refers to the use of artificial intelligence (including machine learning, natural language processing, and generative models) to monitor transactions, communications, trading patterns, customer behavior, and other signals. Typical purposes include compliance, fraud detection, insider trading and market abuse surveillance, anti-money laundering (AML), and operational risk detection. Surveillance applications range from anomaly detection on trading desks to automated review of employee chats and voice recordings. While such systems can detect patterns humans miss, they often operate as complex, opaque “black boxes,” which is a major reason U.S. financial firms are slow to deploy them.

Importantly, these systems are used for high-stakes decisions: freezing accounts, escalating to enforcement, flagging a trader for investigation, or producing evidence that regulators may review. Consequently, the technical strengths of AI come with governance burdens that many institutions find hard to accept without strong guardrails.

Why firms are attracted to AI surveillance (briefly)

Before we dig into the reasons for hesitation, here’s why firms consider AI surveillance at all:

- Efficiency gains: AI can process huge volumes of data — trade records, chat logs, emails, voice transcripts — far faster than humans, reducing manual triage time.

- Improved detection: Machine learning models can surface subtle or complex patterns that fixed-rule systems miss.

- Cost scaling: Once developed and validated, automated surveillance scales more cheaply than manual review teams.

- Continuous monitoring: AI enables near-real-time detection across many channels simultaneously.

- Risk prioritization: AI models can help prioritize investigations by predicted risk, helping compliance teams focus scarce human resources.

Nevertheless, the decision to deploy AI is not solely technical; it’s deeply regulatory, legal, and reputational.

Key reasons U.S. financial firms are hesitant to use AI for surveillance

Adoption of AI for monitoring and surveillance in the U.S. financial sector has been cautious. Below are the core reasons why U.S. financial firms are hesitant to use AI for surveillance, explained in detail.

1. Regulatory uncertainty and supervisory risk

Firstly, regulators in the U.S. have signaled both interest in and wariness of AI. Secondly, firms fear that adoption without crystal-clear supervisory expectations will expose them to exam findings, enforcement actions, or litigation. For example, FINRA and other agencies have made clear that existing rules (on supervision, recordkeeping, and compliance) apply to AI the same way they apply to other tools, but guidance continues to evolve and firms worry about shifting expectations.

Moreover, the U.S. Treasury and other policy bodies have solicited input on AI risks in financial services, emphasizing consumer protection, data quality, and systemic stability — signals that regulators may escalate scrutiny. Consequently, firms worry they could be penalized for deploying imperfect AI or for failing to properly supervise third-party models.

Therefore, regulatory uncertainty remains a heavyweight factor in financial firms’ AI hesitancy: firms would rather move slowly and compliantly than adopt a new technology that could invite fines or reputational damage.

2. Explainability, model risk, and auditability

First, AI models — particularly large neural networks and ensemble models — often produce outputs that are not easily explainable in human terms. Second, when surveillance outcomes lead to consequences (e.g., escalation for enforcement), institutions must explain why a particular alert was raised. If they cannot, they risk noncompliance, unfair actions, or legal challenges.

Furthermore, model risk management frameworks in banks and broker-dealers were built for statistical models with well-understood behavior. However, contemporary AI models may be ill-suited to existing validation techniques; as a result, governance teams hesitate to give these models direct decision-making authority. Explainability challenges have been widely flagged in industry discussion and model governance circles.

3. False positives, alert fatigue, and human trust

When an AI surveillance system produces many false positives, compliance teams become overwhelmed, which undermines trust in the tool. Consequently, firms prefer high-precision systems even if they miss some edge cases. In practice, existing rule-based systems are tuned conservatively; replacing them with a new AI system risks immediate operational pain and backlogs, which discourages rapid deployment.
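To make the precision trade-off concrete, here is a minimal Python sketch. All counts and the 12-minute review time are hypothetical numbers chosen for illustration, and the function names `alert_metrics` and `review_hours` are not from any real surveillance product.

```python
def alert_metrics(true_pos: int, false_pos: int, false_neg: int) -> dict:
    """Return alert volume, precision, and recall from confusion-matrix counts."""
    alerts = true_pos + false_pos          # everything the system flagged
    actual = true_pos + false_neg          # everything that was truly suspicious
    precision = true_pos / alerts if alerts else 0.0
    recall = true_pos / actual if actual else 0.0
    return {"alerts": alerts, "precision": precision, "recall": recall}


def review_hours(alerts: int, minutes_per_alert: float) -> float:
    """Analyst hours needed to review every alert once."""
    return alerts * minutes_per_alert / 60


# Hypothetical daily counts for two tunings of the same system.
broad = alert_metrics(true_pos=18, false_pos=982, false_neg=2)
narrow = alert_metrics(true_pos=15, false_pos=85, false_neg=5)

for name, m in (("broad", broad), ("narrow", narrow)):
    hours = review_hours(m["alerts"], minutes_per_alert=12)
    print(f"{name}: alerts={m['alerts']} precision={m['precision']:.3f} "
          f"recall={m['recall']:.2f} review_hours={hours:.0f}")
```

In this made-up scenario the broad tuning catches slightly more true cases but generates ten times the review workload, which is the kind of arithmetic behind a preference for precision.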

4. Data quality, privacy, and legal limitations

First, AI depends on high-quality labeled data. Second, data in financial firms is often siloed across legacy systems, subject to privacy laws, and restricted by contractual terms (including third-party vendor data). Third, training AI models on sensitive communications or personal data raises privacy and regulatory questions — especially when models may infer sensitive attributes.

In addition, the legal basis for processing certain datasets (e.g., biometric voice data) for surveillance can be unclear and varies across jurisdictions. Therefore, data governance and privacy concerns contribute heavily to the reluctance of U.S. financial firms to use AI for surveillance.

5. Vendor risk and concentration

Many firms rely on a small set of cloud providers, AI platforms, and third-party model vendors. Vendor-built AI can introduce supply-chain risk, and concentration can create systemic vulnerabilities if many institutions rely on the same model or dataset. Therefore, risk teams worry about vendor lock-in, limited control over model internals, and the inability to independently validate third-party models.

Additionally, regulators and policymakers have highlighted the risk of common-mode failures when many firms use similar AI systems, potentially amplifying market vulnerabilities.

Deepak Goyal CFA & FRM

Founder & CEO of RBei Classes

6. Legal liability and evidentiary standards

First, surveillance tools often create the evidence basis for enforcement actions, customer freezes, or internal discipline. Second, if an AI-based system is wrong, the firm can face lawsuits or regulatory sanctions for wrongful action. Third, proving that an opaque model operated properly — and that the firm exercised reasonable supervision — is harder than proving good governance for a rules-based system.

Therefore, legal teams are conservative and often push for human-in-the-loop architectures or augmented tools rather than fully automated decisions, adding friction to adoption.

7. Bias, fairness, and discrimination risks

First, biased training data can produce biased surveillance outcomes, disproportionately targeting specific groups or producing discriminatory outcomes. Second, even when bias is unintentional, the reputational and legal costs can be severe. Third, fairness auditing and mitigation are still evolving disciplines.

Consequently, compliance officers are wary of exposing their firms to discrimination risks through poorly understood AI systems, which fuels financial firms’ AI hesitancy.
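One simple starting point for a fairness audit is to compare how often a system flags members of different groups. The Python sketch below uses fabricated records and hypothetical group labels "A" and "B". A gap in flag rates is only a prompt for further investigation, not proof of bias, since underlying base rates can legitimately differ.

```python
from collections import Counter


def flag_rates(records):
    """records: iterable of (group, flagged) pairs. Returns {group: flag rate}."""
    totals, flagged = Counter(), Counter()
    for group, is_flagged in records:
        totals[group] += 1
        if is_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest flag rate divided by highest; 1.0 means all groups are flagged equally."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Fabricated example: 1,000 reviewed accounts per group.
records = (
    [("A", True)] * 30 + [("A", False)] * 970
    + [("B", True)] * 60 + [("B", False)] * 940
)

rates = flag_rates(records)
ratio = disparity_ratio(rates)
print(rates, round(ratio, 2))
```

Here group B is flagged twice as often as group A, so an auditor would next ask whether the difference traces back to real risk signals or to skew in the training data.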

8. Cybersecurity, adversarial attacks, and model manipulation

AI models can also be manipulated through adversarial inputs, data poisoning, or model extraction attacks. First, surveillance models that ingest external data or user-generated text may be vulnerable to attackers seeking to evade detection. Second, adversarial threats create novel cybersecurity vectors that firms are still learning to defend against.

Therefore, the security posture required to safely operate AI surveillance is higher than for many legacy systems, increasing costs and slowing adoption.

9. Cultural resistance and talent gaps

First, risk and compliance cultures are inherently conservative — by design. Second, business stakeholders and technologists may disagree about acceptable trade-offs between risk and efficiency. Third, skilled AI risk practitioners (who understand model risk, compliance, and financial law) are in short supply.

Consequently, internal politics, training needs, and lack of cross-disciplinary talent slow the rollout of AI surveillance initiatives.

10. Cost, integration complexity, and legacy systems

AI projects require investment in data infrastructure, model validation, monitoring, and ongoing governance. Integrating models into legacy surveillance pipelines while ensuring audit trails and version control is expensive. Therefore, the business case must be compelling to justify the upfront cost and downstream operations.

Regulatory landscape and supervisory signals (why guidance matters)

Importantly, several U.S. regulatory bodies and global standard-setters have already signaled expectations that shape firm behavior.

- FINRA has made it clear that existing supervisory rules apply to AI use and that firms should ensure systems are reasonably designed and supervised. FINRA’s notices and guidance repeatedly emphasize model risk, vendor oversight, and supervisory control.

- The U.S. Treasury has published requests for information and syntheses on AI use in financial services, underscoring potential consumer harms and the need for robust risk management frameworks. Consequently, firms watching those signals are cautious.

- Global bodies like the Financial Stability Board (FSB) have warned that AI could amplify certain systemic vulnerabilities, especially when many participants rely on similar models or datasets. This systemic lens increases prudence among regulated firms.

- Regulators have also taken enforcement actions that signal the risk of overstating AI capabilities or making misleading claims. For example, the SEC has acted against firms that claimed AI-driven investment processes when the reality was different, which sends a warning about “AI washing.”

- Senior policymakers, including Treasury officials, have publicly warned that AI introduces “significant risks” — adding to the incentive for firms to take a conservative approach.

Therefore, the message from regulators is clear: innovation is welcomed, but firms must maintain robust governance. As a result, many institutions delay full-scale AI surveillance rollouts until governance frameworks mature.

Real-world examples and signals from the industry

Furthermore, several concrete industry trends illustrate the cautious approach:

- Many large firms run AI in “shadow mode” for months or years: models generate alerts and predictions that humans review, but the outputs are not used for automated enforcement. This allows validation and calibration without operational risk.

- Some firms develop internal “centers of excellence” for AI to centralize governance, model validation, and vendor oversight. This prevents piecemeal adoption that could fail compliance checks.

- Others prefer hybrid systems: rule-based triggers plus AI-derived risk scores for prioritization — thereby keeping human judgment in the final decision loop.

- Finally, firms increasingly require vendors to provide model cards, documentation, and tighter contractual controls — reflecting elevated vendor governance practices.

Consequently, the industry trend is cautious experimentation plus heavy governance rather than rapid automation.
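The hybrid pattern described above (rule-based triggers plus an AI-derived risk score used only for prioritization) can be sketched in a few lines of Python. The `Alert` fields, the 0.8 threshold, and the ordering policy are all assumptions made for illustration; a real firm would set these through its own governance process.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    rule_hit: bool      # a fixed surveillance rule fired
    risk_score: float   # hypothetical model score in [0, 1]


def build_review_queue(alerts, score_threshold=0.8):
    """Queue alerts for human review; the model only orders work, it never decides."""
    # Rule hits are always reviewed; model-only alerts need a high score.
    queued = [a for a in alerts if a.rule_hit or a.risk_score >= score_threshold]
    # Rule hits first, then descending model score within each group.
    return sorted(queued, key=lambda a: (not a.rule_hit, -a.risk_score))


alerts = [
    Alert("A1", rule_hit=False, risk_score=0.95),
    Alert("A2", rule_hit=True, risk_score=0.20),
    Alert("A3", rule_hit=False, risk_score=0.40),
    Alert("A4", rule_hit=True, risk_score=0.90),
]

queue = build_review_queue(alerts)
print([a.alert_id for a in queue])
```

Keeping every rule hit in the queue means the AI score cannot suppress an alert the legacy rules would have raised, which is one way to keep human judgment in the final decision loop.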

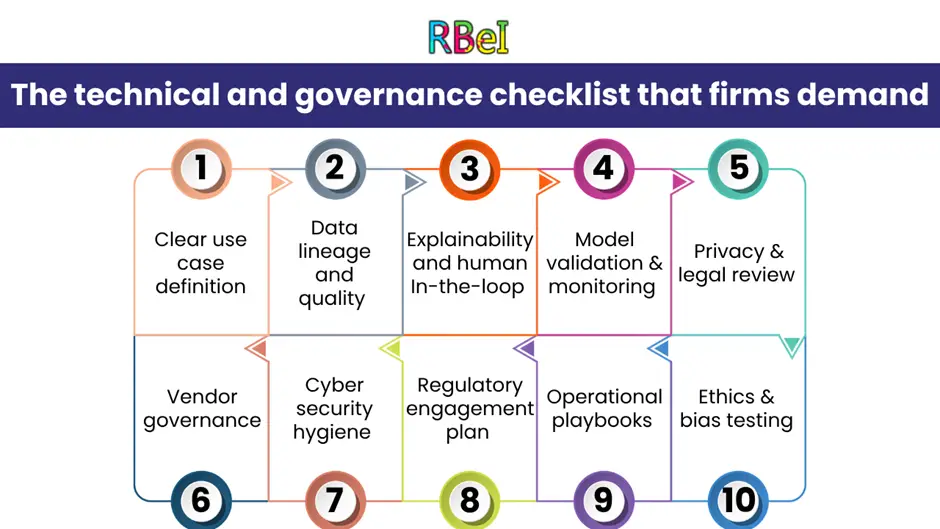

The technical and governance checklist that firms demand

However, for a financial firm to move from hesitation to deployment, many conditions typically must be satisfied. Below is a practical checklist that drives procurement and internal approvals:

Therefore, until firms can confidently tick most boxes, many will remain reluctant.

How firms mitigate risks while still using AI (practical patterns)

Nevertheless, the hesitancy does not mean firms avoid AI entirely. Instead, they adopt patterns that reduce exposure:

- Augmentation not automation: AI helps prioritize alerts or suggest investigations; humans make final decisions.

- Shadow/parallel testing: Running AI in the background to compare outcomes against current systems and collect evidence of benefit.

- Tiered deployment: Deploying AI first for low-risk tasks (e.g., data normalization) before migrating to higher-risk surveillance.

- Explainable models where possible: Using interpretable models (decision trees, rule-based ML) for frontline decisions and reserving complex models for behind-the-scenes risk scoring.

- Robust vendor oversight: Demanding transparency, source code review, or dedicated audit access in vendor contracts.

- Regulatory cooperation: Engaging early with regulators to demonstrate governance and request feedback.

Therefore, smart adoption emphasizes risk-reduction while leveraging AI’s efficiency gains.
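Shadow or parallel testing ultimately comes down to comparing what the candidate model flagged against what the current system flagged, using reviewer-confirmed cases as the reference. The Python sketch below is a simplified illustration; the trade IDs and the `shadow_report` function are hypothetical.

```python
def shadow_report(legacy_alerts: set, model_alerts: set, confirmed: set) -> dict:
    """Summarize agreement between the legacy system and a shadow-mode model.

    confirmed holds IDs that human reviewers judged to be genuine issues.
    """
    return {
        "both": len(legacy_alerts & model_alerts),
        "model_only": len(model_alerts - legacy_alerts),
        "legacy_only": len(legacy_alerts - model_alerts),
        # Genuine issues the model caught that the legacy system missed.
        "confirmed_missed_by_legacy": len((confirmed & model_alerts) - legacy_alerts),
        # Genuine issues the legacy system caught that the model missed.
        "confirmed_missed_by_model": len((confirmed & legacy_alerts) - model_alerts),
    }


legacy = {"T1", "T2", "T3", "T4"}
model = {"T3", "T4", "T5", "T6"}
confirmed = {"T2", "T4", "T5"}

report = shadow_report(legacy, model, confirmed)
print(report)
```

A report like this, accumulated over months, is the kind of evidence a validation team could use before letting the model influence live decisions.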

Classroom corner — What students should learn and practice

If you’re a student studying finance, compliance, data science, or law, here are practical takeaways that will make you valuable to employers navigating AI surveillance in finance:

- Learn the regulatory framework: Understand FINRA rules on supervision and recordkeeping, SEC enforcement precedents, and high-level Treasury/Federal guidance. Regulatory literacy is essential.

- Study model risk management (MRM): Know validation, backtesting, explainability techniques, and how to document model lifecycle governance.

- Master data lineage and privacy basics: Learn how data is collected, labeled, stored, and secured. Also, study data-privacy laws and contractual constraints.

- Practice explainable AI (XAI) techniques: Familiarize yourself with feature importance, SHAP, LIME, rule extraction, and model cards — and know their limitations.

- Understand bias and fairness: Learn how to audit datasets for demographic skew and implement bias mitigation.

- Acquire interdisciplinary fluency: Combine technical skills with regulatory, legal, and business understanding.

- Simulate real-world projects: Build a mini surveillance project — e.g., anomaly detection on synthetic trade data with audit logs and a documentation pack — to demonstrate end-to-end governance awareness.

- Learn vendor oversight and procurement basics: Know what to demand from vendors (documentation, audit rights, SLAs).

- Keep up with supervisory guidance and case law: Regulatory expectations change; habitually monitoring guidance will keep you job-ready.

Therefore, students who couple technical competence with regulatory and governance know-how will be in high demand.
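The mini surveillance project suggested in the list above could start from something like the following Python sketch. It is a toy under stated assumptions: the trades are synthetic, the detector is a simple median-based z-score (a swapped-in technique, not an industry standard for surveillance), the 3.5 cutoff is a common rule of thumb, and `MODEL_VERSION` is a made-up label. The audit log chains SHA-256 hashes so that later edits to an entry become detectable.

```python
import hashlib
import json
import random
import statistics

MODEL_VERSION = "zscore-demo-0.1"  # hypothetical version label
THRESHOLD = 3.5                    # assumed cutoff for the robust z-score


def make_synthetic_trades(n=500, seed=7):
    """Generate fake trades and inject one obvious outlier at index 42."""
    rng = random.Random(seed)
    trades = [{"trade_id": f"T{i:04d}", "notional": rng.gauss(100_000, 15_000)}
              for i in range(n)]
    trades[42]["notional"] = 400_000.0
    return trades


def robust_z_scores(values):
    """Median/MAD-based z-scores, less distorted by outliers than mean/stdev."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        raise ValueError("MAD is zero; robust z-score is undefined")
    return [0.6745 * (v - med) / mad for v in values]


def _digest(event, prev_hash):
    body = {"event": event, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_audit(log, event):
    """Append an entry whose hash covers the event and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    log.append({"event": event, "prev_hash": prev_hash,
                "hash": _digest(event, prev_hash)})


def verify_audit(log):
    """Recompute the hash chain; False means some entry was altered or reordered."""
    prev_hash = "0" * 64
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


trades = make_synthetic_trades()
scores = robust_z_scores([t["notional"] for t in trades])

audit_log, flagged = [], []
for trade, z in zip(trades, scores):
    if abs(z) > THRESHOLD:
        flagged.append(trade["trade_id"])
        append_audit(audit_log, {"trade_id": trade["trade_id"], "z": round(z, 3),
                                 "threshold": THRESHOLD,
                                 "model_version": MODEL_VERSION})

print(flagged, verify_audit(audit_log))
```

Recording the threshold and model version with every alert is the point of the exercise: a reviewer can later reconstruct why a trade was flagged and under which configuration.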

Policy and industry recommendations (how to reduce hesitancy)

Reducing financial firms’ AI hesitancy is a multi-stakeholder task. Below are practical policy and operational recommendations:

- Clearer supervisory expectations: Regulators should publish concrete, use-case-specific guidance that clarifies acceptable documentation and validation standards.

- Standards for explainability: Industry groups can co-develop interpretability standards and model-card templates for surveillance models.

- Open benchmarks and datasets: Curated, privacy-respecting benchmark datasets for common surveillance tasks would help vendors and firms validate models comparably.

- Vendor transparency initiatives: Encourage model provenance documentation and third-party audits to reduce vendor opacity.

- Regulatory sandboxes: Expand sandbox programs that allow supervised experimentation with AI surveillance without full regulatory exposure.

- Cross-industry collaboration: Share anonymized adversarial attack case studies and defense patterns to bolster collective security.

- Workforce development programs: Invest in training for compliance professionals on AI basics and for data scientists on regulatory constraints.

Consequently, these steps can shorten the path from experimentation to safe deployment.

A few cautionary case studies and enforcement signals

Understanding real enforcement examples helps illustrate how regulatory risk translates to firm behavior:

- “AI washing” enforcement: Enforcement actions against firms that overstated AI capabilities signal that regulators will police misleading claims — a reputational and legal hazard for firms.

- Warnings about GenAI and scams: Supervisory bodies have warned that generative AI can amplify fraud and scam sophistication, prompting firms to focus on defensive uses of AI before broader automation.

- Public policy statements: Officials have publicly warned about systemic and consumer risks from AI, prompting conservative risk appetites at major firms.

Therefore, these signals push institutions to adopt slow, documented, and auditable approaches when using AI for surveillance or enforcement.

Trade-offs — What firms gain and what they risk

It’s helpful to think of the decision as a trade-off:

- Gain: Better detection, operational efficiency, faster investigations, and the ability to process new data types (voice, video, social media).

- Risk: Regulatory exposure, model failure, bias, mass false positives, vendor lock-in, adversarial manipulation, and reputational damage.

Consequently, the scale of the potential gain must justify the risk, and for many firms, especially smaller or more heavily regulated ones, the calculus favors incremental adoption.

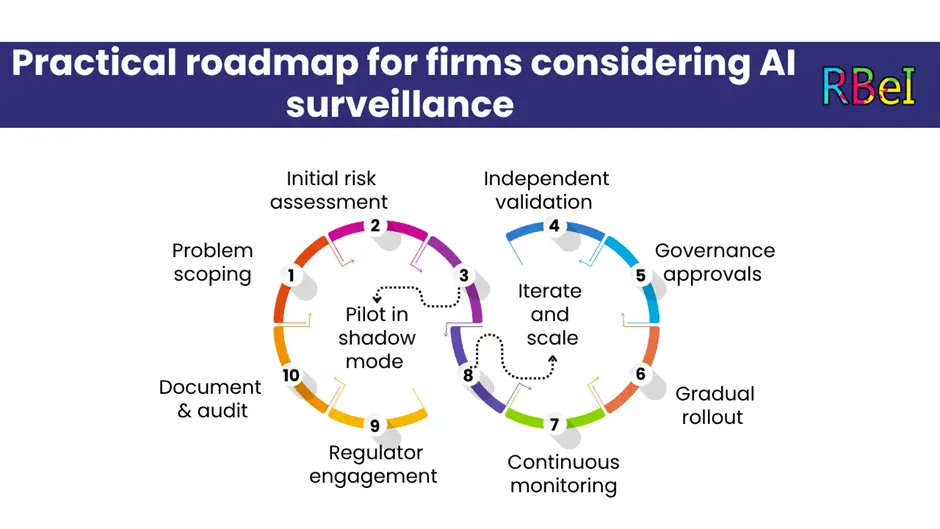

Practical roadmap for firms considering AI surveillance

Finally, here’s a practical step-by-step roadmap that firms can follow to move from hesitation to controlled deployment:

Therefore, this measured roadmap balances innovation with prudence.

Conclusion — Why the caution matters (and how it will change)

In sum, U.S. financial firms that are hesitant to use AI for surveillance are not resisting innovation out of fear of progress. Rather, they are balancing the promise of AI surveillance in finance against a complex mix of regulatory uncertainty, explainability challenges, legal liability, data privacy, vendor risk, and systemic stability concerns. Moreover, financial firms’ AI hesitancy reflects a risk-aware industry that must preserve market integrity and client protection while exploring powerful tools.

However, this cautious posture is not permanent. As regulators clarify expectations, governance practices standardize, vendor transparency improves, and firms accumulate safe-deployment experience (especially via shadow testing and sandboxes), adoption will accelerate — albeit in a controlled and audited manner. Therefore, students and practitioners who master the intersection of AI technical skills and regulatory governance will be the ones leading the next wave of responsible deployments.